Submission on the Final Report of the ACCC Digital Platforms Inquiry

Reset Australia formerly operated under the name Responsible Technology Australia.

To: The Treasury - The Australian Government

From: Responsible Technology Australia

Responsible Technology Australia (RTA) would like to thank the Australian Government for the opportunity to respond to the final report from the ACCC Digital Platforms Inquiry. Additionally, we would like to take this opportunity to congratulate the ACCC on a thorough and impressive investigation into digital platforms in Australia.

Who We Are

RTA is an independent organisation committed to ensuring a just digital environment. We seek to ensure the safety of Australian citizens online whilst advocating for a free business ecosystem that values innovation and competition. In particular, we are concerned with the unregulated environment in which digital platforms currently exist, and we advocate for a considered approach that addresses issues of safety and democracy while ensuring economic prosperity.

1.0 EXECUTIVE SUMMARY

In response to the ACCC Digital Platforms Inquiry Final Report, and elaborated further below, the RTA:

- broadly agrees with the 23 recommendations set out in the Final Report, in particular commending the strong stance on consumer privacy and the recommendation to implement a harmonised media regulatory framework

- encourages exploring an expansion of the remit and powers of the Independent Regulator set out in Recommendations 14 & 15 (most likely the ACMA), so that it can also investigate and combat potential societal harms caused by digital platforms

- recommends that this Independent Regulator be immediately empowered to take on key additional roles and responsibilities, in particular:

  - Initiating an Inquiry into the potential harm that advertisers could cause to individuals, society and/or the democratic process in Australia through the use of digital platforms

  - Proactively auditing the content that algorithms amplify and/or recommend to users, focusing on the spread of harmful or divisive content

Whilst the ACCC Final Report noted that “other important concerns, including the role of digital platforms in promoting terrorist, extremist or other harmful content and how social media is used for political advertising, are outside the scope of this Inquiry”, we believe it is impossible to examine how the digital platform landscape affects advertising and the media sector in Australia in isolation, without also addressing how these platforms structurally manifest broader societal issues. The advent of these digital platforms has intimately linked these issues. It is therefore both practical and necessary to address these harms as part of a holistic regulatory approach.

2.0 INDEPENDENT REGULATOR - THE CASE FOR AN EXPANDED REMIT

2.1 Context

The business model of these digital platforms is built on the monetisation of user data and attention. Consumers provide the platform with their data and attention, which the platform can then monetise for advertisers. This is known as the ‘attention economy’.

The current, effectively limitless collection of personal information allows platforms to build profiles of users down to their vices, interests and vulnerabilities. This enables people to be intimately targeted by both the platform’s algorithms and advertisers, who serve them content designed to keep them engaged. The negative societal effects of this are diverse, ranging from undermining our democratic process and facilitating home-grown extremism to promoting anti-vaccination voices.

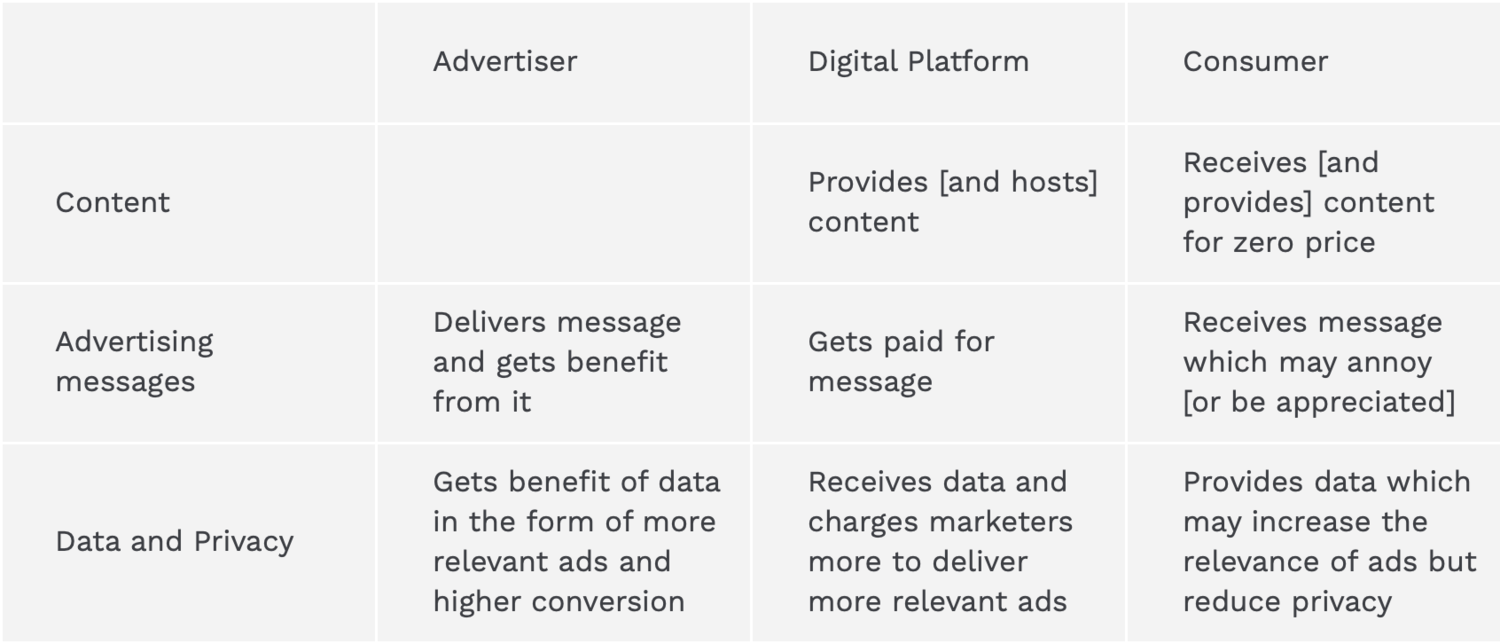

Table 1: Economic Deal between Advertiser, Digital Platform and Consumer, adapted from the ACCC Inquiry on Digital Platforms Final Report pg 376

On the advertising messages side of the platform (refer to Table 1)

Whilst data collection allows the advertising sector to serve more personalised and relevant content to users, this unfettered collection has amassed a data set that enables direct and granular targeting of consumers, down to their key emotional trigger points and personal characteristics, in order to drive engagement. The potential negative effects of this on society have already been seen elsewhere in the world, for example in the targeting of UK voters with misinformation during the Brexit campaign.

On the content side of the platform (refer to Table 1)

As these platforms are designed to monetise the amount of time consumers spend on them, they are incentivised to serve material that keeps users on the platform. Algorithms therefore feed users tailored content calculated to have the greatest potential to keep them engaged. This tends to be more extreme and sensational content, which has been shown to be more captivating.

This prioritisation results in a proliferation of extreme and sensational content, while also enabling actors to game the system by manufacturing content intended to cause outrage. This has been seen in Twitter bots and Russian trolls amplifying anti-vaccination rhetoric and, more recently in Australia, in extremist and manipulative content being pushed by scammers based in Kosovo.

“Greater user engagement logically leads to greater opportunities for advertising revenue for digital platforms.”

- The impact of Digital Platforms on News and Journalistic Content, Centre for Media Transition

2.2 Previous Efforts And Misalignment

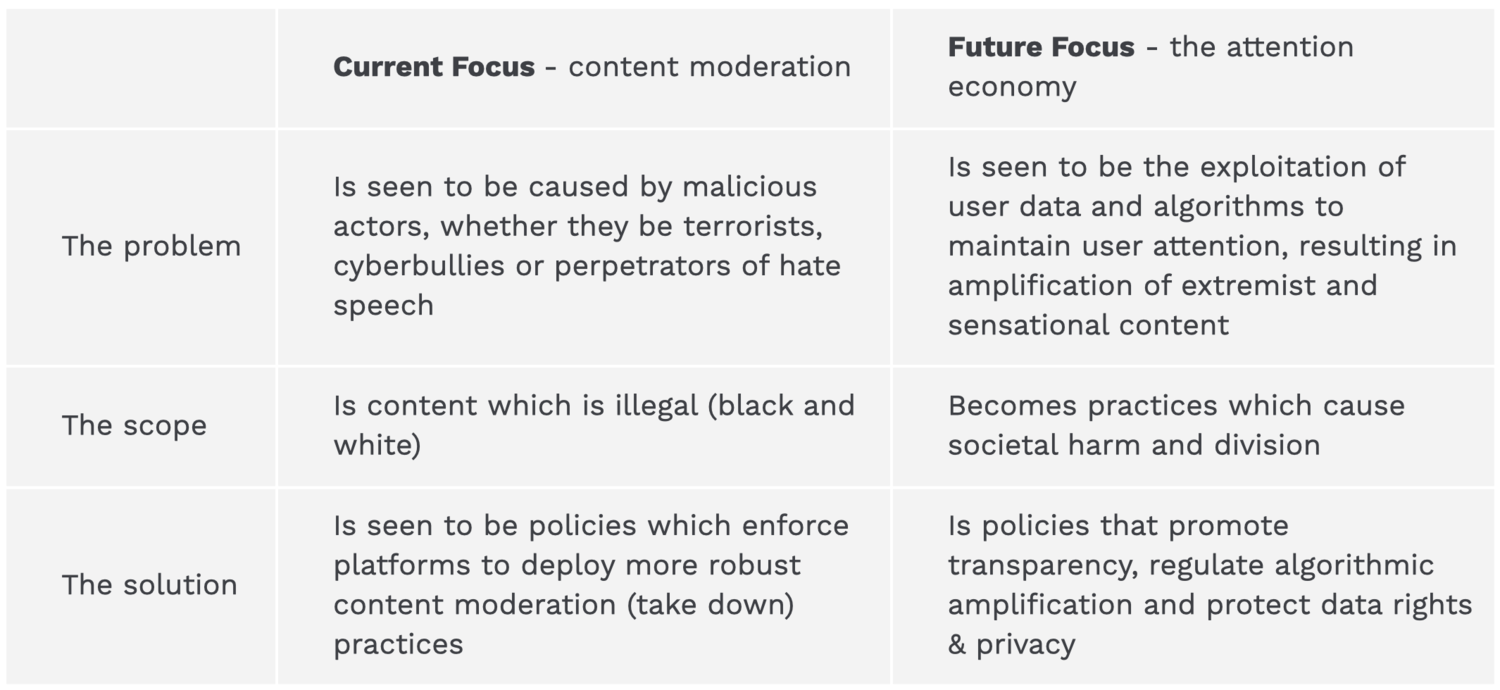

Australia’s existing policies to address these societal harms, such as those administered by the eSafety Commissioner or the Criminal Code Amendment (Sharing of Abhorrent Violent Material) Act 2019, have focused on content moderation rather than on addressing the structural causes.

While these policies are important and allow us to deal with illegal content, they leave us playing catch-up, trying to mitigate the harm of content once it has already been amplified to Australian users. Further, these policies are not well adapted to content, such as unduly polarising, hateful or misinformative material, that is legal but causes significant harm by inciting hate or distorting the truth on important issues.

Whilst content moderation policies are one avenue to mitigate some of the worst of these harms, they are ill-equipped to regulate the profit model of these platforms, which exploits user attention and drives vast profits by serving harmful disinformation via algorithms and advertisers.

We hope that, through this submission and the broader work of the RTA, we can begin a conversation about shifting our regulatory focus to address these issues holistically.

Table 2: Transition from content moderation focused policies to a regulatory environment that focuses on the attention economy

2.3 Building On The ACCC Final Report

As stated in Chapter 6 of the Final Report, the ACCC has acknowledged many of the potential harms caused by digital platforms within the context of harmful material being presented as news or journalism. These included:

- Algorithmic curation and user behaviour on social media have the potential to cause ‘echo chamber’ and ‘filter bubble’ effects

- Algorithms presenting increasingly extreme and ‘outrageous’ content in order to maximise user engagement

- The threats caused by unreliable information or ‘information disorder’, including ‘disinformation’, ‘misinformation’ and ‘malinformation’, which are particularly hard to identify on social media, where news is often presented alongside other content

- The financial incentive for the creation and spread of disinformation, ‘clickbait’ and ‘sensationalist political content’ linking to websites which seem legitimate but are actually content farms

In the Final Report, the ACCC indicates that issues around news plurality are potentially magnified online and that users may be at risk of greater exposure to filter bubbles. However, the Final Report concludes that this does not yet warrant direct Government action, as the mechanisms under Recommendations 14 and 15, which include monitoring the platforms’ voluntary credibility signals and providing a platform to record and respond to complaints about disinformation, will be enough for the Government to gather evidence on the nature and extent of harm caused by these platforms in Australia.

Our view is that the passive forms of regulation and evidence collection outlined in Recommendations 14 and 15 will not be sufficient to gather data on the nature and extent of harm, for the following reasons:

- Users are unlikely to report misleading content. The ACCC Consumer Use of News survey found that whilst 70% of Australian adult news consumers reported having experienced mistakes and/or inaccuracies in their news, only 6% had lodged a formal complaint.

- There is high potential for harmful content to spread and be accepted as the truth. Australian digital literacy is low, and only one-third of the population checks the veracity of news, whilst false news has been shown to spread faster than true news.

- Personalised targeting limits the ability to respond to reports. The opacity of how algorithms curate content, and of how advertisers target specific user segments, will make it difficult to ascertain the extent and severity of consumer complaints about disinformation made to the independent regulator.

In addition, there is a high level of consumer concern over the extent of unreliable news, with around 92% of respondents to the ACCC Consumer Use of News survey having some concern about the quality of the journalism they were consuming. The 2019 Digital News Report found that 62% of Australian news consumers had a high level of concern about the veracity of online information, well above the global average of 55%.

This disconnect between high consumer concern and low consumer action should be an impetus for Government to push for more proactive regulation.

Based on the reasons above, our recommendation is that the independent regulator take a proactive, rather than reactive, approach to gathering the evidence base for disinformation, malinformation and misinformation. The independent regulator should be given the powers and resources to investigate the spread of these harms by exploring how both algorithms and ad platforms facilitate the spread and amplification of disinformation and misinformation, the amplification of extreme content, and the creation of ‘filter bubbles’.

3.0 RECOMMENDATIONS

Our view is that future regulatory approaches should be able to adapt to the rapidly evolving landscape of these platforms. This approach should grant regulators the oversight they need to understand the ways in which algorithms and ad systems amplify or incentivise problematic content, to ensure the safety of our citizens and minimise harms to our society at large.

The Final Report identified that the nature and extent of the harm caused by ‘filter bubbles’ and ‘echo chambers’ within Australia is unclear, and that mechanisms need to be in place to begin building this evidence base. Instances of this harm have already been seen overseas, such as in the Brexit campaign and the 2016 US Presidential Election. It is therefore our strong view that the Government should take an active approach to gathering this evidence in order to inform more considered future regulation.

Our following recommendations have been made with the intention to set up the infrastructure to develop this evidence base.

3.1 Expanding The Remit Of An Independent Regulator, Such As The ACMA Or The eSafety Commissioner, To Proactively Investigate And Monitor The Impact Of Digital Platforms On Society

Extending beyond the scope of _Recommendation 14: Monitoring efforts of digital platforms to implement credibility signalling_ and _Recommendation 15: Digital Platforms Code to counter disinformation_ as defined in the Final Report, these expanded powers would begin to provide the structure needed to investigate the potential societal harms these digital platforms might cause.

How this could be implemented:

Establish a specialist digital platforms taskforce or branch within the independent regulator, focused on issues of societal harm arising from algorithmic manipulation and the digital platforms’ advertising systems. This taskforce would provide the architecture needed to investigate potential issues further, begin proactively collecting an evidence base and provide recommendations for future policy and regulatory directions.

3.2 Conduct An Inquiry Into The Potential Harm That Advertisers Could Cause To Individuals, Society And/Or The Democratic Process In Australia, Through The Use Of The Digital Platforms

This Inquiry should explore several key areas, such as:

- Reviewing the potential for social, emotional and political manipulation via digital platforms’ ad systems, including investigating previous and existing international cases (e.g. Brexit), as well as understanding the scale and depth of the data points available to advertisers for targeting

- Determining the level of risk Australia faces when advertisers leverage user data to manipulate public sentiment and influence political outcomes, as demonstrated by the Cambridge Analytica scandal

- Reviewing the data sets of Digital Platforms’ advertising partners (such as Experian and Quantium), including the data points and customer segments made available to advertisers, as well as the number of Australians on their lists

- Recommending changes to the advertising process to minimise the potential for harm

How this could be implemented:

The taskforce established within the independent regulator would be briefed to conduct an investigation over several months into the ways in which the advertising functions of digital platforms could be exploited by malicious actors.

This would require the cooperation of both the digital platforms and their advertising partners to identify vulnerabilities, including the extent of data targeting available, the identity verification process, advertising content checks and restrictions, and other procedures put in place by digital platforms and their partners. The outcome of the inquiry would be a set of recommendations for specific platforms to strengthen their advertising systems.

3.3 Ongoing And Proactive Auditing Of The Content That Algorithms Amplify To Users, Focusing On The Spread Of Harmful Or Divisive Content

These expanded responsibilities would allow the regulator to begin building an evidence base on how the way algorithms prioritise and distribute content affects Australian society, in order to inform future regulation. This should focus on (but not be limited to) the following:

- The nature of age-appropriate content delivery to children, including violent and sexual material

- The algorithmic delivery of content deemed to be fake news or disinformation

- The effect of algorithmic delivery on the diversity of content reaching any given user, to investigate filter bubbles

- The amplification of extremist or sensationalist content by these algorithms

How this could be implemented:

Algorithmic audits of these platforms would need to be designed in collaboration with the relevant companies. One potential mechanism would be for platforms to self-publish, in real time, the content their algorithms are amplifying and serving in Australia, allowing the regulator to focus on the outcomes of the algorithms rather than their design.

This would mitigate two key concerns of the platforms:

- The inherent sensitivity of allowing external scrutiny of algorithms that are the intellectual property of private enterprises and represent significant trade secrets

- The algorithms’ code itself is unlikely to provide insight into the type of content surfaced, as algorithms are configured for engagement and are indiscriminate as to the ‘nature’ of the content users are engaging with. In addition, the weight algorithms give to different signals is constantly changing, so any understanding gained would quickly become outdated.

This mechanism would allow an independent regulator to gather the evidence required to assess whether news and other content recommended by algorithms is in line with societal expectations, or whether platforms need to adjust their algorithms to ensure content appropriateness, quality and diversity in line with our media regulation frameworks. One example of outcome-focused algorithmic auditing is algotransparency.org, which provides a snapshot of the videos recommended on YouTube.

4.0 CONCLUSIONS

RTA acknowledges the scale of the task ahead in adequately regulating these digital platforms and mitigating the societal harms they inadvertently cause. We look forward to working together to bring about the best outcomes for businesses, consumers and society at large.

Should the Government have any further questions or require more information, we would be happy to assist.

5.0 REFERENCES

- Scott (30 July 2019), ‘Cambridge Analytica did work for Brexit groups, says ex-staffer’, Politico, found at: https://www.politico.eu/article/cambridge-analytica-leave-eu-ukip-brexit-facebook/

- BBC News (26 July 2018), ‘Vote Leave’s targeted Brexit ads released by Facebook’, BBC News, found at: https://www.bbc.com/news/uk-politics-44966969

- Vosoughi et al. (2018), ‘The spread of true and false news online’, Science, found at: https://science.sciencemag.org/content/359/6380/1146

- Nicas (2 Feb 2018), ‘How YouTube Drives People to the Internet’s Darkest Corners’, Wall Street Journal, found at: https://www.wsj.com/articles/how-youtube-drives-viewers-to-the-internets-darkest-corners-1518020478

- Broniatowski et al. (2018), ‘Weaponized Health Communication: Twitter Bots and Russian Trolls Amplify the Vaccine Debate’, Am J Public Health, found at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6137759/

- Workman and Hutcheon (16 March 2019), ‘Facebook trolls and scammers from Kosovo are manipulating Australian users’, ABC News, found at: https://www.abc.net.au/news/2019-03-15/trolls-from-kosovo-are-manipulating-australian-facebook-pages/10892680

- Australian Government, eSafety Commissioner, found at: https://www.esafety.gov.au/complaints-and-reporting/offensive-and-illegal-content-complaints/the-action-we-take

- Australian Parliament (2019), Criminal Code Amendment (Sharing of Abhorrent Violent Material) Act 2019, No. 38, found at: https://www.legislation.gov.au/Details/C2019A00038

- Roy Morgan (2018), ‘Consumer Use of News’, prepared for the ACCC, found at: https://www.accc.gov.au/system/files/ACCC%20consumer%20survey%20-%20Consumer%20use%20of%20news%2C%20Roy%20Morgan%20Research.pdf

- Fisher et al. (2019), ‘Digital News Report: Australia 2019’, found at: https://apo.org.au/node/240786

- Kelly et al. (22 Aug 2018), ‘This is what filter bubbles actually look like’, _MIT Technology Review_, found at: https://www.technologyreview.com/s/611807/this-is-what-filter-bubbles-actually-look-like/